Integrating LLMs

Combining a purpose-built intelligent document processing (IDP) platform with the flexibility of large language models (LLMs) allows you to move beyond standard data extraction. This hybrid approach enables advanced capabilities like summarization, contextual reasoning, and automated communication. By integrating an LLM of your choice with IDP, you can augment your existing document workflows, handle unstructured content with greater precision, and unlock new efficiencies—all within a secure, governed, and scalable environment.

Integrating an LLM with your document processing workflows delivers significant operational advantages and accelerates your path to intelligent automation.

Go beyond simple data extraction. Use LLMs to interpret extracted information, compare values against regulations, or normalize data to industry-specific codes and classifications.
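A minimal sketch of code normalization, assuming a hypothetical `llm` callable that stands in for whichever connector or SDK you use (no ABBYY or vendor API is called here). The code list is also hypothetical. The key idea is to give the LLM a closed list of codes and to accept its answer only if it matches one of them.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical internal code list; a real deployment would load the
# industry-specific classification its business rules require.
ALLOWED_CODES = {
    "IT-HW": "Computer hardware",
    "OFF-SUP": "Office supplies",
    "LOG-SHP": "Shipping and courier services",
}


def build_normalization_prompt(description: str, codes: dict[str, str]) -> str:
    """Ask the LLM to choose exactly one code from a closed list."""
    options = "\n".join(f"{code}: {label}" for code, label in sorted(codes.items()))
    return (
        "Classify the line item below. Reply with one code from the list "
        "and nothing else.\n"
        f"Codes:\n{options}\n"
        f"Line item: {description}"
    )


def normalize(description: str, llm: Callable[[str], str],
              codes: dict[str, str] = ALLOWED_CODES) -> str | None:
    """Return a validated code, or None so the item can be routed to review."""
    answer = llm(build_normalization_prompt(description, codes)).strip()
    # Never trust free-form output: accept only codes that exist in the list.
    return answer if answer in codes else None
```

Returning `None` instead of the raw answer keeps an unexpected reply from silently entering downstream systems.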

Trigger intelligent follow-up actions based on document content. For example, an LLM can automatically draft a professional email to a supplier if an invoice contains discrepancies identified by the IDP platform.
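One way to split this work, sketched under assumptions: the discrepancy check stays deterministic and runs on fields already extracted from the invoice and purchase order, and the LLM is asked to draft an email only when issues exist. The `LineItem` shape, the tolerance, and the prompt wording are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LineItem:
    sku: str
    quantity: int
    unit_price: float


def find_discrepancies(invoice: list[LineItem], purchase_order: list[LineItem],
                       tolerance: float = 0.01) -> list[str]:
    """Deterministically compare extracted invoice lines with PO lines."""
    po_by_sku = {item.sku: item for item in purchase_order}
    issues: list[str] = []
    for item in invoice:
        po = po_by_sku.get(item.sku)
        if po is None:
            issues.append(f"{item.sku}: not on purchase order")
            continue
        if item.quantity != po.quantity:
            issues.append(f"{item.sku}: quantity {item.quantity} billed, {po.quantity} ordered")
        if abs(item.unit_price - po.unit_price) > tolerance:
            issues.append(
                f"{item.sku}: unit price {item.unit_price:.2f} billed, "
                f"{po.unit_price:.2f} agreed"
            )
    return issues


def build_email_prompt(supplier: str, invoice_number: str,
                       issues: list[str]) -> str | None:
    """Only ask the LLM for a draft when there is something to report."""
    if not issues:
        return None
    bullets = "\n".join(f"- {issue}" for issue in issues)
    return (
        f"Draft a brief, professional email to {supplier} about invoice "
        f"{invoice_number}. Mention only these discrepancies and request a "
        f"corrected invoice:\n{bullets}"
    )
```

Because the facts in the prompt come from the comparison, the LLM only has to phrase the message, not decide what is wrong.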

Process large volumes of unstructured text by having an LLM generate concise summaries. This enables your teams to evaluate candidate profiles, review legal clauses, or analyze reports more efficiently.
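Long documents often exceed what fits comfortably in one request. A common pattern, shown here as a sketch rather than ABBYY's method, is map-reduce summarization: pack paragraphs into size-limited chunks, summarize each, then summarize the summaries. The character budget and the `llm` callable are assumptions; a production version would budget by tokens for the chosen model.

```python
from __future__ import annotations

from typing import Callable


def chunk_paragraphs(text: str, max_chars: int = 4000) -> list[str]:
    """Greedily pack paragraphs into chunks of at most max_chars characters.
    A paragraph longer than max_chars is split at the character limit."""
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        while len(para) > max_chars:  # oversized paragraph: hard split
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


def summarize(text: str, llm: Callable[[str], str], max_chars: int = 4000) -> str:
    """Map-reduce: summarize each chunk, then combine the partial summaries."""
    chunks = chunk_paragraphs(text, max_chars)
    if not chunks:
        return ""
    if len(chunks) == 1:
        return llm(f"Summarize in three sentences:\n{chunks[0]}")
    partials = [llm(f"Summarize this section in two sentences:\n{c}") for c in chunks]
    return llm("Combine these section summaries into one short summary:\n"
               + "\n".join(partials))
```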

Use the generative capabilities of LLMs for rapid prototyping and for handling highly variable or unstructured content where deterministic rules fall short.

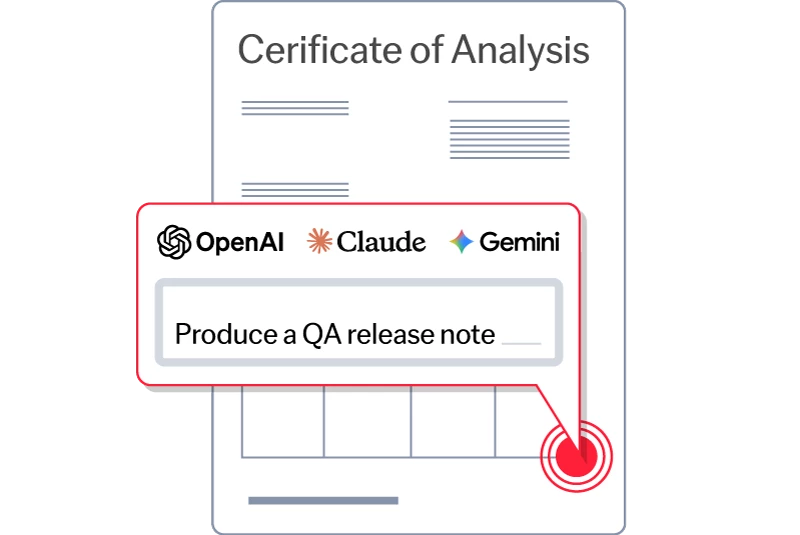

Integrating an LLM into your ABBYY document processing workflow is a straightforward process. Our open architecture allows you to bring your own LLM (OpenAI, Google Gemini, Anthropic Claude, Mistral AI, etc.) and connect it as a tool to enhance extraction, validation, and post-processing tasks, ensuring you get the best of both worlds: the structure of IDP and the flexibility of generative AI.
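"Bring your own LLM" is easiest to maintain when the workflow depends on a small interface rather than on one vendor's SDK. The sketch below assumes such an interface; `LLMClient`, `EchoClient`, and the provider registry are hypothetical names, and real adapters would wrap each vendor's official SDK behind the same `complete` method.

```python
from __future__ import annotations

from typing import Protocol


class LLMClient(Protocol):
    """Minimal interface the workflow depends on; each vendor gets an adapter."""

    def complete(self, prompt: str) -> str: ...


class EchoClient:
    """Hypothetical test double; a real adapter would call a vendor SDK."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


# Register one adapter per provider you want to support.
PROVIDERS: dict[str, type] = {"echo": EchoClient}


def get_client(name: str) -> LLMClient:
    if name not in PROVIDERS:
        raise ValueError(f"unknown LLM provider: {name!r}")
    return PROVIDERS[name]()


def post_process(extracted: dict[str, str], client: LLMClient) -> str:
    """Send extracted fields to whichever model is configured."""
    fields = "; ".join(f"{k}={v}" for k, v in sorted(extracted.items()))
    return client.complete(f"Check these extracted fields for consistency: {fields}")
```

Swapping models then becomes a configuration change (`get_client(name)`) rather than a rewrite of the workflow.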

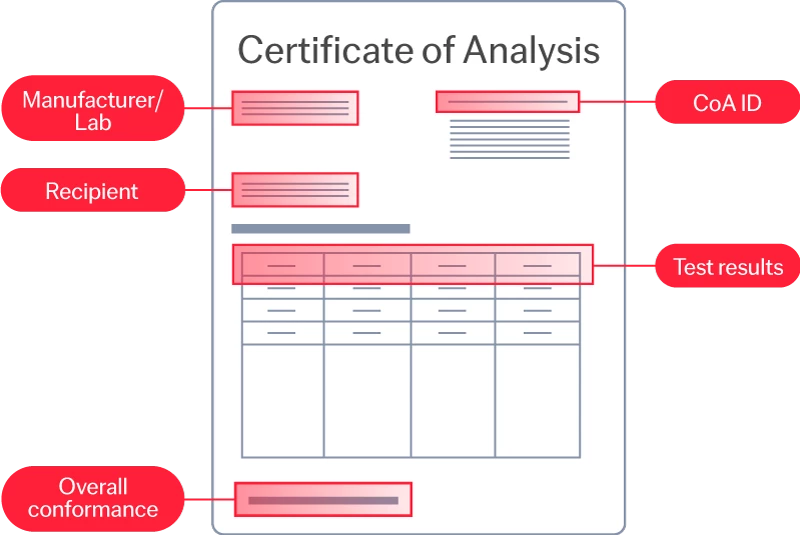

Use ABBYY’s purpose-built platform to perform initial document classification, segmentation, and data extraction. This provides a structured, accurate foundation of facts from your documents.
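To illustrate what a "structured foundation of facts" can look like downstream, here is a sketch that assumes each extracted field carries a confidence score. The `ExtractedField` shape and the 0.9 threshold are hypothetical and do not reflect the actual ABBYY Vantage result schema. Trusted fields can move on to the LLM step, while uncertain ones go to human verification first.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ExtractedField:
    name: str
    value: str
    confidence: float  # assumed range 0.0 to 1.0


def split_by_confidence(fields: list[ExtractedField], threshold: float = 0.9
                        ) -> tuple[dict[str, str], list[str]]:
    """Separate trusted facts from fields that need human verification."""
    trusted = {f.name: f.value for f in fields if f.confidence >= threshold}
    review = [f.name for f in fields if f.confidence < threshold]
    return trusted, review
```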

Send the extracted, structured data—or specific segments of the document—to your chosen LLM via a pre-built connector or API call. This targeted approach minimizes token usage and cost while reducing hallucination risk.
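A sketch of the targeted approach: send only the fields a task needs, serialized as compact JSON, instead of the full document text. The field names and task text are hypothetical, and `rough_tokens` uses the common approximation of about four characters per token for English text, which is only an estimate; use your model's own tokenizer for real budgeting.

```python
from __future__ import annotations

import json


def rough_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def build_targeted_prompt(fields: dict[str, str], wanted: list[str], task: str) -> str:
    """Include only the requested fields that were actually extracted."""
    subset = {name: fields[name] for name in wanted if name in fields}
    payload = json.dumps(subset, separators=(",", ":"), sort_keys=True)
    return f"{task}\nData: {payload}"
```

Comparing `rough_tokens(full_document_text)` with `rough_tokens(build_targeted_prompt(...))` gives a quick sense of how much context the targeted request saves.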

Leverage the LLM's output for downstream tasks such as data enrichment, generating summaries, or drafting communications, all orchestrated within your automated workflow.
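Before LLM output drives a downstream step, it helps to validate it. This sketch assumes you asked the model to reply in JSON with `summary` and `action` keys; those keys and the action names are hypothetical. Any malformed or unexpected reply falls back to manual review instead of breaking the workflow.

```python
from __future__ import annotations

import json

REQUIRED_KEYS = {"summary": str, "action": str}
ALLOWED_ACTIONS = {"approve", "email_supplier", "manual_review"}


def parse_llm_result(raw: str) -> dict:
    """Validate the LLM's JSON reply; anything malformed goes to manual review."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {"summary": "", "action": "manual_review", "error": "invalid JSON"}
    if not isinstance(data, dict) or not all(
        isinstance(data.get(key), kind) for key, kind in REQUIRED_KEYS.items()
    ):
        return {"summary": "", "action": "manual_review", "error": "missing fields"}
    if data["action"] not in ALLOWED_ACTIONS:
        return {"summary": data["summary"], "action": "manual_review",
                "error": f"unexpected action {data['action']!r}"}
    return {"summary": data["summary"], "action": data["action"]}
```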

Find out how Ashling developed an innovative solution using ABBYY Vantage and GPT-4 Turbo to automate the processing of 30,000 lease agreements per year for a global fast-food franchise—with 82% accuracy.

This post breaks down the difference between large language models (LLMs) and small language models (SLMs), and explains why choosing the right model, paired with high-quality data, is key to unlocking the full potential of AI for your business.

When LLMs and Document AI are used together, the strengths of one compensate for the limitations of the other. Find out how in this article.

Applying the wrong kind of AI to document processing, particularly for business-critical workflows, can create more problems than it solves. Get the playbook on how to combine GenAI with Document AI for a multiplier effect.

This white paper explores the significant problems that arise from using raw, unstructured document data for LLM applications, and proposes a better way to ensure that your AI is built on a foundation of clarity and accuracy.

This article, including a demo video, uses an ABBYY Vantage use case, insurance claims automation, to show how these technologies work together in practice.

Schedule a demo and see how ABBYY intelligent automation can transform the way you work—forever.