January 14, 2026

As enterprises accelerate their exploration of generative AI, one question has become central to nearly every automation strategy:

Where do large language models (LLMs) genuinely elevate document workflows, and where does purpose-built intelligent document processing (IDP) remain essential?

This question was at the heart of the recent ABBYY x Ashling webinar, “LLMs in Document Processing: Where They Fit and Where They Don’t.” Moderated by Slavena Hristova of ABBYY, the webinar featured an insightful mix of panelists: Morgan Conque of Ashling, Nick Carr of ABBYY, and Apoorva Dawalbhakta of QKS Group. Each offered a distinct perspective on how customer expectations are evolving alongside the technology.

The discussion opened by recognizing the dual sentiment shaping today’s enterprise AI landscape: strong excitement about the capabilities of LLMs, paired with a growing need for realism about what production environments actually require. The panelists emphasized that many organizations have experimented with generative AI, yet few have operationalized it at scale.

Early in the discussion, panelists agreed that LLMs introduce a new cognitive layer to “document understanding.” They can interpret narratives, grasp context, and generate summaries well beyond the reach of traditional systems. However, enterprises still need deterministic accuracy, reproducible outputs, auditability, and predictable cost structures, all areas where LLMs are still maturing.

"IDP has always excelled at unlocking structured data from documents, but the real opportunity now is helping customers find deeper meaning and context in that data. They want to turn information into intelligence—making faster, smarter decisions with the right technologies and safeguards to handle complexity and drive meaningful automation."

Nick Carr, Director Pre-Sales EMEA, ABBYY

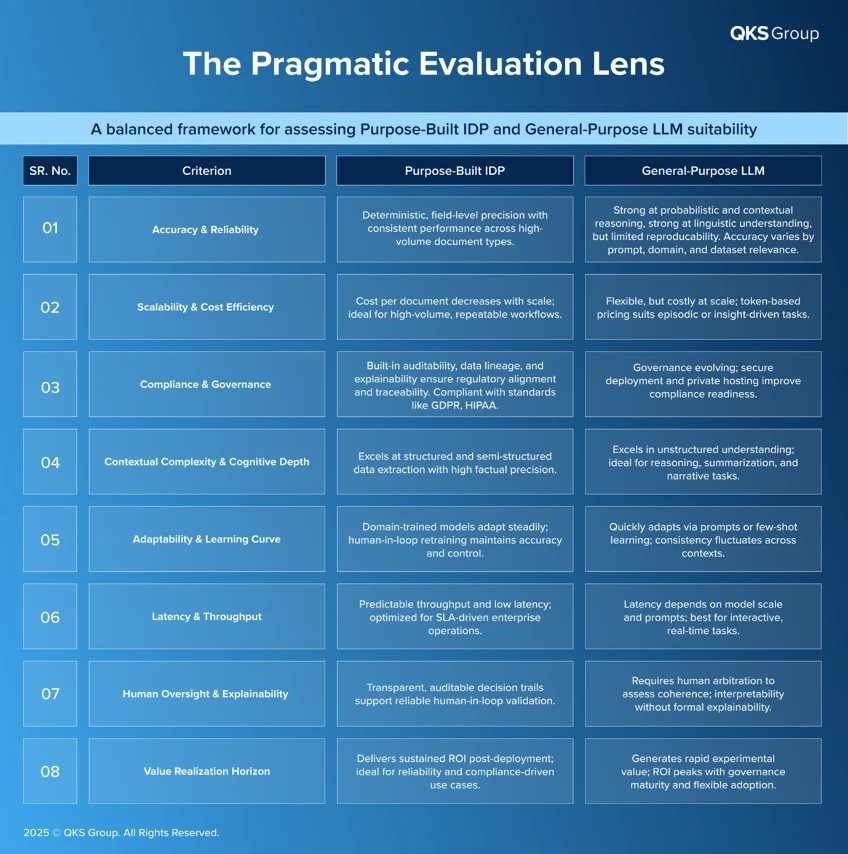

The session introduced a practical methodology for evaluating where IDP, LLMs, and hybrid models fit best. This structured approach, called the Pragmatic Evaluation Lens and developed by QKS Group, maps critical workflows across eight dimensions, helping enterprises match each type of task to the type of intelligence it requires.

The approach aims to replace the ambiguity of hype-driven experimentation with disciplined decision-making, so that adoption is both governed and purpose-built.

The overall takeaway was that IDP continues to anchor accuracy and governance, while LLMs enrich workflows with contextual interpretation. Accordingly, mature organizations are increasingly designing hybrid architectures in which each system contributes its strengths.

“Enterprises today are discovering that the real challenge is not choosing between IDP and LLMs, but understanding how to architect meaningful collaboration between them. While LLMs expand the cognitive horizon of document workflows, IDP on the other hand provides the reliability, governance, and precision that production environments actually demand.”

Apoorva Dawalbhakta, Associate Director Research, QKS Group

The most mature organizations are moving toward a “hybrid intelligence mindset”: systems that interpret context, validate facts, and act with accountability. Whether through structured evaluation models or domain-led design, the goal should remain the same: aligning intelligence to intent, and building automation that is not only powerful but also trustworthy.

As the conversation progressed, security became a central theme. The panelists unanimously observed that as enterprises consider applying LLMs to sensitive or regulated documents, issues of data retention, privacy, transparency, and compliance come to the forefront.

The panel further noted that IDP systems provide inherent auditability, whereas LLMs require controlled deployment models to operate securely. The market is responding with private LLMs, retrieval-augmented pipelines, and robust governance frameworks, all of which make scaling safer rather than riskier.

"Security and governance are the cornerstones of any enterprise-grade solution, ensuring trust, compliance, and accountability. At Ashling, we lead with secure-by-design principles—governed pipelines, encrypted data, and no public model access to sensitive information. By separating IDP and LLM layers and logging every interaction, we deliver precise, auditable systems that empower innovation with confidence."

Morgan Conque, Vice President of GTP Strategy, Ashling

The panel also explored how the human role is evolving. Instead of merely correcting system errors, humans now act as evaluators of context, arbiters of reasoning, and stewards of responsible automation. This shift is becoming even more pronounced with the emergence of agentic automation, in which AI systems reason and collaborate autonomously while humans define intent, boundaries, and oversight.

Looking ahead, the panel further highlighted three emerging forces:

Overall, the panel agreed that IDP and LLMs will remain distinct technologies, but their interdependence will deepen as more enterprises design their architectures for orchestration rather than replacement.

The discussion underscored a clear shift: the future of “document intelligence” lies in balancing deterministic precision with generative cognition, governed by human intent and enterprise oversight. Organizations that master this orchestration stand to achieve smarter automation outcomes, including greater accuracy, stronger governance, and meaningful efficiency gains, across their document workflows. In the end, the advantage will belong to enterprises that treat intelligence not merely as a tool, but as a design principle.

You can access the full replay of the webinar here.